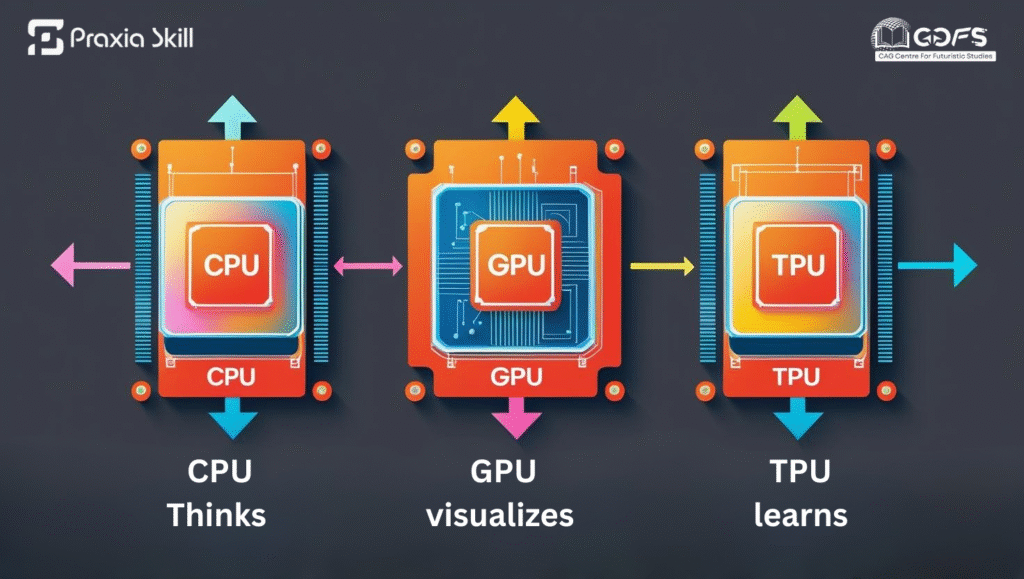

In today’s digital age, powerful processors are the engines behind everything we do—from browsing the web and gaming to the complex world of artificial intelligence. While you’ve likely heard of a CPU, you may not be as familiar with a GPU or a TPU. Understanding the key differences between these three processors can help you choose the right technology, whether you’re a gamer, a content creator, or an aspiring AI developer. Let’s break down what each one does in a clear, easy-to-understand way.

The CPU: Your Computer’s Brain

Think of the Central Processing Unit (CPU) as the brain of your computer. It’s the general-purpose workhorse that handles most of your daily tasks, such as running your operating system, launching programs, and working through the calculations behind everyday software.

- How it works: Each CPU core works through its instructions one after another and is built to finish each one as quickly as possible. A CPU has only a few of these powerful cores, so it excels at tasks where each step depends on the one before it.

- Best for: Everyday tasks like web browsing, writing documents, and light gaming.

The CPU is like a skilled chef who meticulously prepares one dish at a time.
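
To make the one-step-at-a-time idea concrete, here is a minimal Python sketch of a single core working through a short program in order. It is a toy model rather than a picture of real hardware: the `run_program` function and the `LOAD`, `ADD`, and `MUL` instruction names are made up for illustration, and real CPUs layer techniques such as pipelining and multiple cores on top of this basic idea.

```python
def run_program(program):
    """Run (operation, value) pairs strictly in order on one accumulator.

    Hypothetical toy instruction set: LOAD, ADD, MUL.
    """
    accumulator = 0
    trace = []  # accumulator value after each step
    for operation, value in program:
        if operation == "LOAD":
            accumulator = value
        elif operation == "ADD":
            accumulator += value
        elif operation == "MUL":
            accumulator *= value
        else:
            raise ValueError(f"unknown operation: {operation}")
        trace.append(accumulator)
    return accumulator, trace


program = [("LOAD", 2), ("ADD", 3), ("MUL", 4)]
result, trace = run_program(program)
print(result)  # 20
print(trace)   # [2, 5, 20]
```

Each step needs the result of the step before it, so extra cores would not help here. This is the kind of dependent, step-by-step work where a fast single core shines.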

The GPU: The Parallel Powerhouse

The Graphics Processing Unit (GPU) was originally designed to render graphics, most famously for video games. Its real strength is performing thousands of calculations simultaneously, an approach known as parallel processing. This makes it well suited to tasks that apply the same operation across a massive amount of data.

- How it works: Unlike a CPU, a GPU has thousands of smaller, simpler cores working in unison. This architecture lets it carry out many operations at the same time.

- Best for: High-end gaming, 3D rendering, video editing, and compute-heavy work like deep learning and cryptocurrency mining.

A GPU is like a huge kitchen with hundreds of chefs all working on different parts of a massive banquet simultaneously.
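
The "many chefs" idea can be sketched in Python by splitting one big job into chunks and handing each chunk to its own worker. This is only an illustration of how the work is divided up. It runs on the CPU using threads from Python's standard library, not on a GPU, and it will not be faster in practice. The function names and the pixel-brightening task are assumptions chosen for the example.

```python
from concurrent.futures import ThreadPoolExecutor


def brighten_chunk(chunk, amount=40):
    """Brighten every 8-bit pixel value in one chunk, capping at 255."""
    return [min(pixel + amount, 255) for pixel in chunk]


def split_into_chunks(data, n_chunks):
    """Cut data into at most n_chunks pieces of near-equal size."""
    size = max(1, -(-len(data) // n_chunks))  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]


def brighten_parallel(pixels, workers=4):
    """Hand each chunk to its own worker, then stitch the results together."""
    chunks = split_into_chunks(pixels, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() returns results in the same order as the input chunks
        results = pool.map(brighten_chunk, chunks)
        return [pixel for chunk in results for pixel in chunk]


pixels = [0, 100, 230, 255, 10, 20, 30, 40]
print(brighten_parallel(pixels))  # [40, 140, 255, 255, 50, 60, 70, 80]
```

The key point is that no pixel depends on any other pixel, so the chunks can be processed in any order or all at once. A GPU takes the same idea much further, with thousands of cores each handling a tiny slice of the data.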

The TPU: The AI Specialist

The Tensor Processing Unit (TPU) is a custom chip, known as an application-specific integrated circuit (ASIC), developed by Google specifically for machine learning workloads. It’s engineered to be exceptionally fast at the kind of math that neural networks rely on.

- How it works: A TPU is optimized for tensor operations, chiefly the large matrix multiplications at the heart of neural networks. This design lets it accelerate both the training and inference of deep learning models.

- Best for: AI applications like image recognition, natural language processing, recommendation systems, and large-scale AI research, especially within cloud environments.

A TPU is like a high-tech machine in that kitchen, custom-built to make one specific dish incredibly quickly and efficiently.
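
To show what the math behind neural networks looks like, here is a plain-Python sketch of a matrix multiplication, the core tensor operation in most deep learning models. The `matmul` and `multiply_accumulate_count` helpers are hypothetical names written for this example. A real TPU performs the same multiply-and-add steps in dedicated hardware rather than in nested loops.

```python
def matmul(a, b):
    """Multiply an m x k matrix by a k x n matrix (both given as lists of rows)."""
    m, k, n = len(a), len(b), len(b[0])
    if any(len(row) != k for row in a):
        raise ValueError("inner dimensions must match")
    out = [[0] * n for _ in range(m)]
    for i in range(m):
        for j in range(n):
            for p in range(k):
                out[i][j] += a[i][p] * b[p][j]  # one multiply-accumulate step
    return out


def multiply_accumulate_count(m, k, n):
    """Number of multiply-accumulate steps the loops above perform."""
    return m * k * n


inputs = [[1, 2], [3, 4]]
weights = [[5, 6], [7, 8]]
print(matmul(inputs, weights))                  # [[19, 22], [43, 50]]
print(multiply_accumulate_count(2, 2, 2))       # 8
print(multiply_accumulate_count(1024, 1024, 1024))  # 1073741824
```

Even this tiny 2 x 2 example takes 8 multiply-and-add steps, and a single 1024 x 1024 multiplication takes over a billion. Neural networks repeat operations like this constantly, which is why a chip built around this one pattern can pay off.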

Quick Comparison: CPU vs. GPU vs. TPU

| Feature | CPU | GPU | TPU |
| --- | --- | --- | --- |
| Architecture | A few powerful cores | Thousands of smaller cores | Specialized AI-focused cores |
| Processing Type | Sequential processing | Parallel processing | AI-optimized processing |
| Best For | General computing | Graphics, AI training | Machine learning |
| Speed | Slower for parallel tasks | Very fast for parallel tasks | Extremely fast for AI tasks |
| Cost | Generally more affordable | Can be expensive | Often accessed via cloud services |
| Use Cases | Office work, OS operations | Gaming, video editing, and AI | Cloud-based AI/ML workloads |

When to Choose Each Processor

- Choose a CPU if you need an all-rounder for general-purpose computing, everyday applications, and versatility.

- Choose a GPU if your work involves gaming, 3D rendering, video production, or training deep learning models.

- Choose a TPU if you are focused on large-scale AI and machine learning, particularly within cloud computing environments.

Wrap-Up:

Think of CPUs, GPUs, and TPUs as three different superheroes in the tech universe.

- CPUs are the all-rounders: the reliable heroes who can handle just about anything you throw at them.

- GPUs are the parallel-processing speed demons: perfect for jaw-dropping visuals and AI wizardry.

- TPUs are the AI specialists: laser-focused on making machine learning lightning fast.

And the story doesn’t end here. With technology racing ahead, new “heroes” are bound to join the lineup — each smarter, faster, and more powerful, ready to take computing to places we haven’t even imagined yet.